With the increasing use of algorithmic decision-making (ADM) systems in all areas of life, the discussions about a “European approach to AI” are becoming more urgent. Political stakeholders at the German and European levels describe this approach using ideas such as “human-centered” systems and “trustworthy AI.” A large number of ethical guidelines for the design of algorithmic systems have been published, with most agreeing on the importance of values such as privacy, justice and transparency.

In April 2019, the Bertelsmann Stiftung published its Algo.Rules, a set of nine principles for the ethical development and use of algorithmic systems. We argued that these criteria should be integrated from the start when developing any system, enabling them to be implemented by design. However, like many initiatives, we are currently facing the challenge of making sure that our principles are actually put into practice.

Making general ethical principles measurable!

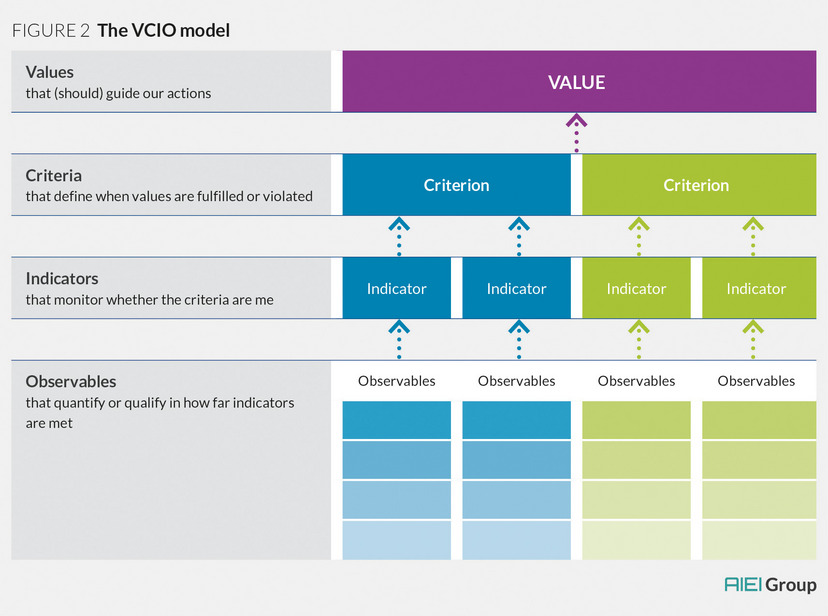

In order to take this next step, general ethical principles need to be made measurable. Currently, values such as transparency and justice are understood in many different ways by different people. This leads to uncertainty within the organizations developing AI systems, and impedes the work of oversight bodies and watchdog organizations. The lack of specific and verifiable principles thereby undermines the effectiveness of ethical guidelines.

In response, the Bertelsmann Stiftung has created the interdisciplinary AI Ethics Impact Group in cooperation with the nonprofit VDE standards-setting organization. With our joint working paper “AI Ethics: From Principles to Practice – An Interdisciplinary Framework to Operationalize AI Ethics,” we seek to bridge this gap by explaining how AI ethics principles could be operationalized and put into practice on a European scale. The AI Ethics Impact Group includes experts from a broad range of fields, including computer science, philosophy, technology impact assessment, physics, engineering and the social sciences. The working paper was co-authored by scientists from the Algorithmic Accountability Lab of the TU Kaiserslautern, the High-Performance Computing Center Stuttgart (HLRS), the Institute of Technology Assessment and Systems Analysis (ITAS) in Karlsruhe, the Institute for Philosophy of the Technical University Darmstadt, the International Center for Ethics in the Sciences and Humanities (IZEW) at the University of Tübingen, and the think tank iRights.Lab, among other institutions.

A proposal for an AI ethics label

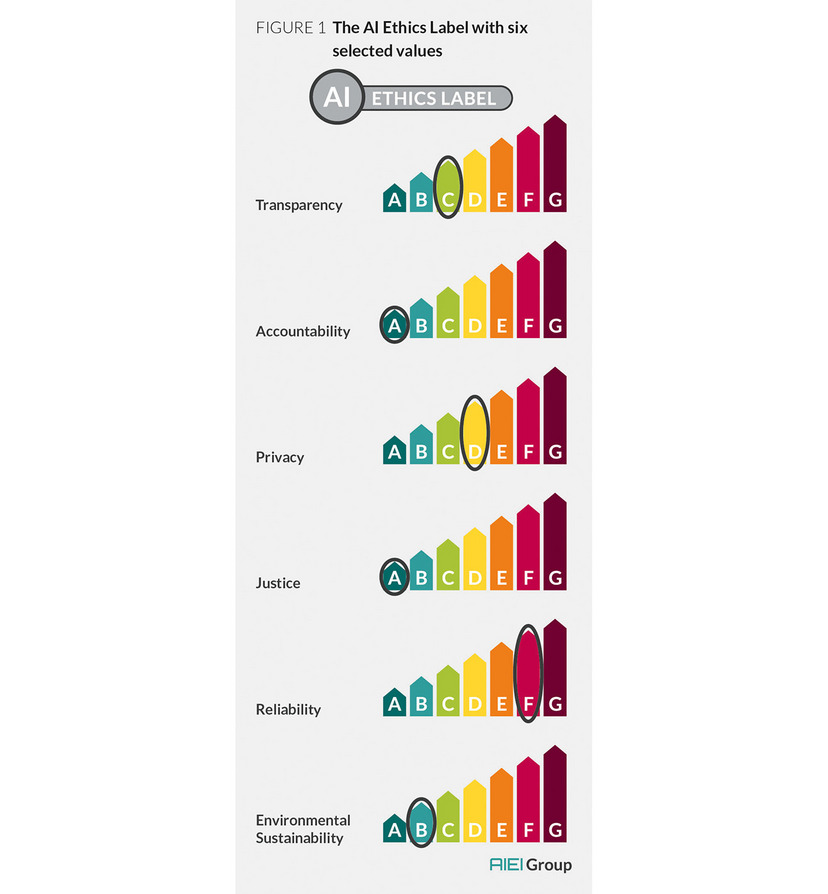

At its core, our working paper proposes the creation of an ethics label for AI systems. In a manner similar to the European Union’s energy-efficiency label for household appliances, such a label could be used by AI developers to communicate the quality of their products. For consumers and organizations planning to use AI, such a label could enhance comparability between available products, allowing quick assessments to be made as to whether a certain system fulfills the necessary ethical requirements for a given application. Through these mechanisms, the approach could incentivize the ethical development of AI beyond the requirements currently enshrined in law. Based on a meta-analysis of more than 100 AI ethics guidelines, the working paper proposes that transparency, accountability, privacy, justice, reliability and environmental sustainability be established as the six key values receiving ratings under the label system.

The proposed label would not be a simple yes/no seal of quality. Rather, it would provide nuanced ratings of the AI system’s relevant criteria, as illustrated in the graphic below.

![Three different measuring tapes](/fileadmin/files/_processed_/1/3/csm_william-warby-WahfNoqbYnM-unsplash_Original_93109_01_c4a017188b.jpg)
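
To make the idea of a graded label more concrete, it could be represented as a small data structure: one rating per value, checked against the minimum requirements of a given application. The following Python sketch is purely illustrative. The six value names come from the working paper as summarized above; the A-to-G scale, the `EthicsLabel` class and the `shortfalls` function are assumptions made for this example and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Dict, List

# The six values named in the working paper (as summarized in this article).
VALUES = (
    "transparency",
    "accountability",
    "privacy",
    "justice",
    "reliability",
    "environmental_sustainability",
)

# Hypothetical rating scale, ordered from best to worst.
# The article does not specify a scale; A to G is assumed here for illustration.
SCALE = ("A", "B", "C", "D", "E", "F", "G")


@dataclass(frozen=True)
class EthicsLabel:
    """Illustrative label: one grade per value for a single AI system."""

    system_name: str
    ratings: Dict[str, str]

    def __post_init__(self) -> None:
        missing = set(VALUES) - set(self.ratings)
        if missing:
            raise ValueError(f"missing ratings for: {sorted(missing)}")
        for value, grade in self.ratings.items():
            if value not in VALUES:
                raise ValueError(f"unknown value: {value}")
            if grade not in SCALE:
                raise ValueError(f"unknown grade: {grade}")


def shortfalls(label: EthicsLabel, minimum: Dict[str, str]) -> List[str]:
    """Return the values whose grade is worse than the required minimum."""
    return [
        value
        for value, required in minimum.items()
        if SCALE.index(label.ratings[value]) > SCALE.index(required)
    ]
```

An organization could then state its minimum requirements for a particular application, for example at least "B" for privacy and "C" for justice, and see at a glance which values of a candidate system fall short. This mirrors the point that the label is not a yes/no seal: the same system may be acceptable for one application and not for another.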